Prefetching Technique Focusing on Timing

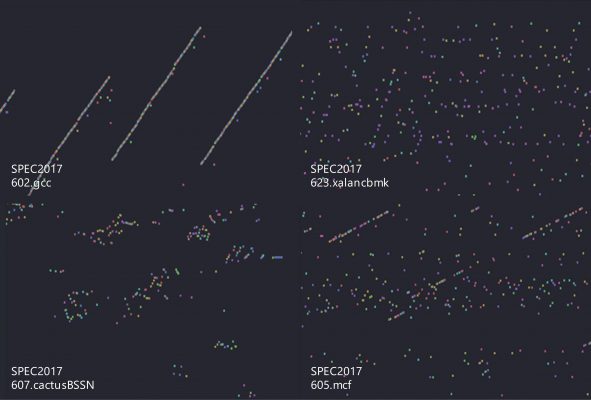

To obtain the best performance from a processor, the data and instructions it is about to process should reside in cache memory. However, cache memory trades capacity for speed and can hold only a portion of that data and those instructions. Prefetching techniques, which predict the data and instructions that will be needed and move them into the cache ahead of time, have therefore been widely studied. Existing research, however, has been limited to predicting which data and instructions to prefetch. In response, we introduced the concept of prefetch timing: predicting when a prefetch should be issued. Our proposed method based on this idea issues prefetches in a timely manner and achieves the highest performance in the world. As a special feature of our research, we developed sazanami, a tool for visualizing memory access patterns, which we use in our work (see the figure above).
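Why timing matters can be illustrated with a toy model. The sketch below is not the T-SKID or D-JOLT algorithm; it only classifies a single prefetch as late, timely, or early. It assumes a fixed memory latency and a fixed `residency` window, a hypothetical simplification of how long an unused line survives in the cache before eviction. The function names and all numbers are illustrative assumptions.

```python
def classify_prefetch(issue_cycle, use_cycle, mem_latency, residency):
    """Classify one prefetch as 'late', 'timely', or 'early' in a toy model."""
    arrival = issue_cycle + mem_latency      # cycle at which the line reaches the cache
    if arrival > use_cycle:
        return "late"                        # demand access still waits on memory
    if use_cycle - arrival > residency:
        return "early"                       # line assumed evicted before it is used
    return "timely"


def sweep_lead_times(use_cycle, mem_latency, residency, leads):
    """Map each lead time (cycles between issue and use) to its classification."""
    return {
        lead: classify_prefetch(use_cycle - lead, use_cycle, mem_latency, residency)
        for lead in leads
    }


if __name__ == "__main__":
    # Assumed numbers: 200-cycle memory latency, unused lines survive 300 cycles.
    result = sweep_lead_times(use_cycle=1000, mem_latency=200, residency=300,
                              leads=[100, 200, 400, 600])
    for lead, outcome in result.items():
        print(f"lead={lead:4d} cycles -> {outcome}")
```

Under these assumed numbers, only lead times between 200 and 500 cycles are useful: a shorter lead leaves part of the memory latency exposed, and a longer one lets the line be evicted before it is needed. Predicting the correct address alone is therefore not enough.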

- Tomoki Nakamura, Toru Koizumi, Yuya Degawa, Hidetsugu Irie, Shuichi Sakai, Ryota Shioya: “T-SKID: Timing Skid Prefetcher”, The Third Data Prefetching Championship (in conjunction with ISCA 2019), June, 2019.

- Tomoki Nakamura, Toru Koizumi, Yuya Degawa, Hidetsugu Irie, Shuichi Sakai, Ryota Shioya: “D-JOLT: Distant Jolt Prefetcher”, The 1st Instruction Prefetching Championship (in conjunction with ISCA 2020), pp. 1–5, June, 2020. (3rd place)

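- Tomoki Nakamura, Toru Koizumi, Yuya Degawa, Hidetsugu Irie, Shuichi Sakai, Ryota Shioya: “An Instruction Prefetcher Focusing on the Properties of Prefetch Distance” (in Japanese), IEICE Technical Report, Vol. 120, No. 121, pp. 1–8, Jul., 2020. (Yamashita SIG Research Award; SIG Outstanding Young Presenter Award)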

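- Toru Koizumi, Tomoki Nakamura, Yuya Degawa, Hidetsugu Irie, Shuichi Sakai, Ryota Shioya: “A Data Prefetcher That Separates Address Prediction from Timing Prediction” (in Japanese), IPSJ SIG Technical Report, Vol. 2022-ARC-248, No. 17, pp. 1–8, Mar., 2022.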

- Toru Koizumi, Tomoki Nakamura, Yuya Degawa, Hidetsugu Irie, Shuichi Sakai, Ryota Shioya: “T-SKID: Predicting When to Prefetch Separately from Address Prediction”, Design, Automation and Test in Europe Conference, pp. 1393–1398, Mar., 2022.